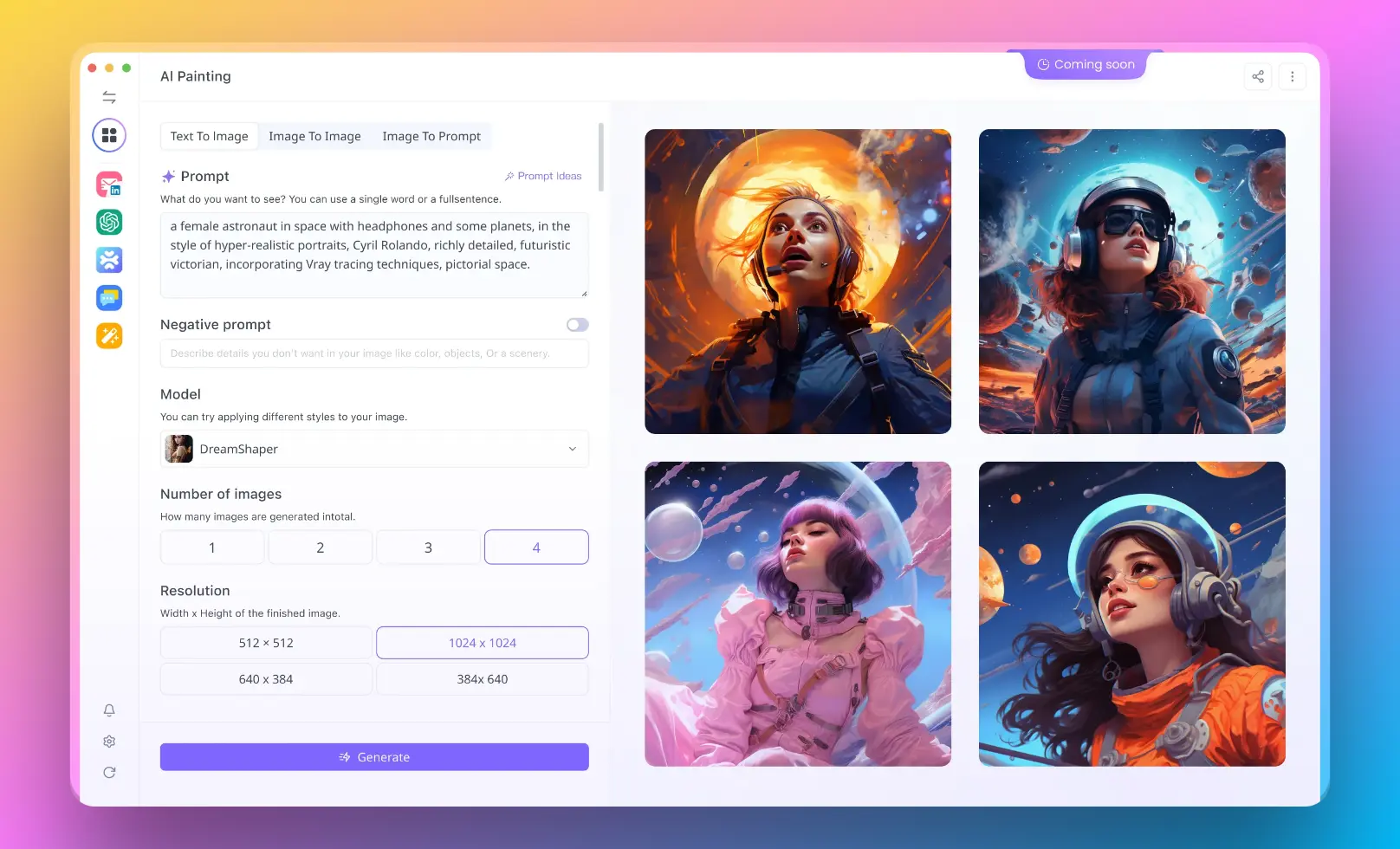

Then, You cannot miss out Anakin AI!

Anakin AI is an all-in-one platform for all your workflow automation, create powerful AI App with an easy-to-use No Code App Builder, with Deepseek, OpenAI's o3-mini-high, Claude 3.7 Sonnet, FLUX, Minimax Video, Hunyuan...

Build Your Dream AI App within minutes, not weeks with Anakin AI!

Introduction to Llama 4: A Breakthrough in AI Development

Meta has unveiled Llama 4, a series of natively multimodal, open-weight AI models that pairs strong benchmark performance with broad availability for developers. This article reviews the reported Llama 4 benchmarks and explains where and how you can use Llama 4 online.

The Llama 4 Family: Models and Architecture

The Llama 4 collection includes three primary models, each designed for specific use cases while maintaining impressive performance benchmarks:

Llama 4 Scout: The Efficient Powerhouse

Llama 4 Scout features 17 billion active parameters with 16 experts, totaling 109 billion parameters. Despite its relatively modest size, it outperforms all previous Llama models and competes favorably against models like Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1 across various benchmarks. What sets Llama 4 Scout apart is its industry-leading context window of 10 million tokens, a remarkable leap from Llama 3's 128K context window.

The model fits on a single NVIDIA H100 GPU with Int4 quantization, making it accessible for organizations with limited computational resources. Llama 4 Scout excels at image grounding, precisely aligning user prompts with visual concepts and anchoring responses to specific regions in images.
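The single-GPU claim can be checked with back-of-the-envelope arithmetic. The sketch below counts only the memory needed to hold the weights at a few precisions. The 80 GB figure is an assumption (the common H100 variant), and the calculation ignores the KV cache and activations, which also need room at inference time.

```python
# Rough weight-memory estimate for Llama 4 Scout at different precisions.
TOTAL_PARAMS = 109e9   # total parameters, as stated in the article
H100_MEMORY_GB = 80    # assumption: 80 GB H100 variant

BITS_PER_PARAM = {"bf16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(total_params: float, bits: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return total_params * bits / 8 / 1e9


for name, bits in BITS_PER_PARAM.items():
    gb = weight_memory_gb(TOTAL_PARAMS, bits)
    verdict = "fits" if gb < H100_MEMORY_GB else "does not fit"
    print(f"{name}: {gb:.1f} GB of weights, {verdict} in {H100_MEMORY_GB} GB")
```

At 4 bits per parameter the weights come to roughly 54.5 GB, which leaves headroom on an 80 GB card, while 8-bit and 16-bit weights do not fit on one GPU.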

Llama 4 Maverick: The Performance Champion

Llama 4 Maverick is the performance flagship, with 17 billion active parameters and 128 experts, totaling 400 billion parameters. Benchmark results show it outperforming GPT-4o and Gemini 2.0 Flash across numerous tests while achieving results comparable to DeepSeek v3 on reasoning and coding tasks, with less than half the active parameters.

This model serves as Meta's product workhorse for general assistant and chat use cases, excelling in precise image understanding and creative writing. Llama 4 Maverick strikes an impressive balance between multiple input modalities, reasoning capabilities, and conversational abilities.

Llama 4 Behemoth: The Intelligence Titan

While not yet publicly released, Llama 4 Behemoth represents Meta's most powerful model to date. With 288 billion active parameters, 16 experts, and nearly two trillion total parameters, it outperforms GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on several STEM benchmarks. This model served as the teacher for the other Llama 4 models through a process of codistillation.

Llama 4 Benchmarks: Setting New Standards

Performance Across Key Metrics

Benchmark results demonstrate Llama 4's exceptional capabilities across multiple domains:

Reasoning and Problem Solving

Llama 4 Maverick reports strong results on reasoning benchmarks, competing favorably with much larger models. On LMArena, the experimental chat version scores an Elo of 1417. LMArena ratings come from head-to-head human preference votes, so this figure reflects overall chat quality rather than reasoning alone.
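To put a rating like 1417 in perspective, the standard Elo formula converts a rating gap into an expected head-to-head win rate. The sketch below applies that textbook formula. The opponent ratings are hypothetical values chosen for illustration, and LMArena's own scoring pipeline may differ in detail.

```python
def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Expected share of pairwise comparisons won by A under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


MAVERICK_EXPERIMENTAL = 1417  # rating quoted in the article

# Hypothetical opponent ratings, for illustration only.
for opponent in (1417, 1350, 1250):
    rate = expected_win_rate(MAVERICK_EXPERIMENTAL, opponent)
    print(f"vs. a model rated {opponent}: expected win rate {rate:.1%}")
```

Equal ratings give an even split, and a gap of 400 points corresponds to roughly ten-to-one odds.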

Coding Performance

Both Llama 4 Scout and Maverick excel at coding tasks, with Maverick achieving competitive results with DeepSeek v3.1 despite having fewer parameters. The models demonstrate strong capabilities in understanding complex code logic and generating functional solutions.

Multilingual Support

Llama 4 models were pre-trained on 200 languages, including over 100 with more than 1 billion tokens each. Overall, that is 10x more multilingual tokens than Llama 3. This extensive language coverage makes them well suited to global applications.

Visual Understanding

As natively multimodal models, Llama 4 Scout and Maverick demonstrate strong visual comprehension. They can process multiple images alongside text (Meta reports good results with up to 8 images), enabling visual reasoning and understanding tasks that span several images at once.
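A minimal sketch of what a multi-image prompt might look like in practice, assuming the hosting provider accepts the widely used OpenAI-style chat message format with `image_url` content parts. That is an assumption to verify against each provider's documentation. The helper name and the example URLs are hypothetical.

```python
MAX_IMAGES = 8  # the article reports good results with up to 8 images


def build_multimodal_message(prompt: str, image_urls: list[str]) -> dict:
    """Build one user message that mixes a text prompt with several images."""
    if len(image_urls) > MAX_IMAGES:
        raise ValueError(f"got {len(image_urls)} images, expected at most {MAX_IMAGES}")
    content = [{"type": "text", "text": prompt}]
    content += [{"type": "image_url", "image_url": {"url": url}} for url in image_urls]
    return {"role": "user", "content": content}


message = build_multimodal_message(
    "What changed between these two charts?",
    ["https://example.com/chart-q1.png", "https://example.com/chart-q2.png"],
)
print(len(message["content"]), "content parts")
```

Capping the image count at the tested limit keeps requests inside the range where results have been reported.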

Long Context Processing

Llama 4 Scout's 10 million token context window represents an industry-leading achievement. This enables capabilities like multi-document summarization, parsing extensive user activity for personalized tasks, and reasoning over vast codebases.
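For multi-document work, a quick budget check shows whether a corpus is likely to fit in the window. The sketch below is a rough estimate only: the 4-characters-per-token ratio is a common rule of thumb for English text, not a property of the Llama 4 tokenizer, and the function names are hypothetical.

```python
import math

SCOUT_CONTEXT_TOKENS = 10_000_000  # context window stated in the article
CHARS_PER_TOKEN = 4                # assumption: rough heuristic for English text


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(documents, reserved_for_output=4_096, window=SCOUT_CONTEXT_TOKENS):
    """Return (fits, prompt_tokens) for a list of documents sent in one prompt."""
    prompt_tokens = sum(estimate_tokens(doc) for doc in documents)
    return prompt_tokens + reserved_for_output <= window, prompt_tokens


docs = ["a" * 400] * 10  # ten short placeholder documents
fits, used = fits_in_context(docs)
print(f"~{used} prompt tokens, fits: {fits}")
```

For a real deployment, count tokens with the model's actual tokenizer before relying on the result.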

How Llama 4 Achieves Its Performance

Architectural Innovations in Llama 4

Several technical innovations contribute to Llama 4's impressive benchmark results:

Mixture of Experts (MoE) Architecture

Llama 4 is Meta's first model family to use a mixture-of-experts architecture. In this approach, only a fraction of the model's total parameters are activated for each token (for Maverick, 17 billion of 400 billion), which makes both training and inference more compute-efficient.
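The routing idea can be illustrated with a toy example. This is a generic top-k mixture-of-experts sketch in plain Python, not Meta's implementation. The experts are stand-in scalar functions, and the router logits are hard-coded where a real model would compute them from the token.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=1):
    """Pick the top_k experts and renormalize their gate weights to sum to 1."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, experts, router_logits, top_k=1):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](token) for i, w in route_token(router_logits, top_k))


# Toy setup: 16 experts, where expert i multiplies its input by (i + 1).
experts = [lambda x, scale=i + 1: scale * x for i in range(16)]
router_logits = [0.0] * 16
router_logits[3] = 5.0  # pretend the router strongly prefers expert 3

print(moe_layer(2.0, experts, router_logits, top_k=1))

# Share of parameters active per token, using the figures from the article.
for name, active, total in [("Scout", 17e9, 109e9), ("Maverick", 17e9, 400e9)]:
    print(f"{name}: {active / total:.1%} of parameters active per token")
```

Because only the selected experts run, compute per token tracks the active parameter count rather than the total.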

Native Multimodality with Early Fusion

Llama 4 incorporates early fusion to seamlessly integrate text and vision tokens into a unified model backbone. This enables joint pre-training with large volumes of unlabeled text, image, and video data.

Advanced Training Techniques

Meta developed a new training technique called MetaP for reliably setting critical model hyperparameters. The company also trained in FP8 precision without sacrificing quality, achieving 390 TFLOPs/GPU during pre-training of Llama 4 Behemoth.

iRoPE Architecture

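A key innovation in Llama 4 is the use of interleaved attention layers without positional embeddings, combined with inference-time temperature scaling of attention. Meta calls this the "iRoPE" architecture: the "i" refers to the interleaved layers, and "RoPE" to the rotary position embeddings used in most of the other layers. The design is intended to improve length generalization.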
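The two ingredients can be sketched in a few lines. This is an illustration of the general idea only: the interval of 4 between position-free layers and the scale values are hypothetical, and the code does not reproduce Meta's actual layer layout or scaling formula.

```python
import math


def layer_uses_rope(layer_index: int, nope_interval: int = 4) -> bool:
    """True if the layer applies rotary embeddings; every nope_interval-th layer skips them."""
    return (layer_index + 1) % nope_interval != 0


def attention_weights(scores, scale=1.0):
    """Softmax over attention scores after multiplying them by a temperature-style scale."""
    scaled = [s * scale for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Interleaved schedule for a hypothetical 8-layer model.
schedule = ["RoPE" if layer_uses_rope(i) else "NoPE" for i in range(8)]
print(schedule)

# A larger scale sharpens the attention distribution over the same scores.
scores = [2.0, 1.0, 0.5]
print(max(attention_weights(scores, scale=1.0)))
print(max(attention_weights(scores, scale=2.0)))
```

Sharpening attention at inference time is one way to keep it from spreading too thinly as the sequence grows, which is the intuition behind scaling for long contexts.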

Where to Use Llama 4 Online

Official Access Points for Llama 4

Meta AI Platforms

The most direct way to experience Llama 4 is through Meta's official channels:

- Meta AI Website: Access Llama 4 capabilities through the Meta.AI web interface

- Meta's Messaging Apps: Experience Llama 4 directly in WhatsApp, Messenger, and Instagram Direct

- Llama.com: Download the models for local deployment or access online demos

Download and Self-Host

For developers and organizations wanting to integrate Llama 4 into their own infrastructure:

- Hugging Face: Download Llama 4 Scout and Maverick models directly from Hugging Face

- Llama.com: Official repository for downloading and accessing documentation

Third-Party Platforms Supporting Llama 4

Several third-party services are rapidly adopting Llama 4 models for their users:

Cloud Service Providers

Major cloud platforms are integrating Llama 4 into their AI services:

- Amazon Web Services: Deploying Llama 4 capabilities across their AI services

- Google Cloud: Incorporating Llama 4 into their machine learning offerings

- Microsoft Azure: Adding Llama 4 to their AI toolset

- Oracle Cloud: Providing Llama 4 access through their infrastructure

Specialized AI Platforms

AI-focused providers offering Llama 4 access include:

- Hugging Face: Access to models through their inference API

- Together AI: Integration of Llama 4 into their services

- Groq: Offering high-speed Llama 4 inference

- Deepinfra: Providing optimized Llama 4 deployment
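Many hosted inference services expose an OpenAI-style chat completions endpoint, so a first request often looks like the sketch below. Whether a given provider from the list above follows this convention, and what its base URL and Llama 4 model identifier are, must be checked in that provider's documentation. The URL, key, and model name used here are placeholders, not real values.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Prepare (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder values: substitute the provider's real base URL, key, and model ID.
request = build_chat_request(
    base_url="https://api.example.invalid/v1",
    api_key="YOUR_API_KEY",
    model="hypothetical-llama-4-model-id",
    prompt="Summarize the Llama 4 model family in two sentences.",
)
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` sends it. Keeping construction separate from sending makes it easy to inspect the payload first.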

Local Deployment Options

For those preferring to run models locally:

- Ollama: Easy local deployment of Llama 4 models

- llama.cpp: C/C++ implementation for efficient local inference

- vLLM: High-throughput serving of Llama 4 models
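As a sketch of the local route, the snippet below talks to an Ollama server over its REST chat endpoint on the default port. It assumes Ollama is installed and running and that a Llama 4 model has already been pulled. The model tag passed in is an assumption to check against the Ollama model library, and the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local address


def build_ollama_request(model: str, prompt: str) -> urllib.request.Request:
    """Prepare a non-streaming chat request for a local Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Pull the assistant's text out of a non-streaming chat response."""
    return response_body["message"]["content"]


def chat_with_local_model(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request; requires a running Ollama server with the model pulled."""
    with urllib.request.urlopen(build_ollama_request(model, prompt), timeout=timeout) as resp:
        return extract_reply(json.load(resp))
```

With the server running, `chat_with_local_model("llama4", "Hello")` returns the reply text, where `"llama4"` stands in for whichever tag was pulled. vLLM's server speaks the OpenAI-style protocol instead, so it needs a differently shaped request.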

Practical Applications of Llama 4

Enterprise Use Cases for Llama 4

Llama 4's impressive benchmarks make it suitable for numerous enterprise applications:

Content Creation and Management

Organizations can leverage Llama 4's multimodal capabilities for advanced content creation, including writing, image analysis, and creative ideation.

Customer Service

Llama 4's conversational abilities and reasoning capabilities make it ideal for sophisticated customer service automation that can understand complex queries and provide helpful responses.

Research and Development

The model's STEM capabilities and long context window support make it valuable for scientific research, technical documentation analysis, and knowledge synthesis.

Multilingual Business Operations

With extensive language support, Llama 4 can bridge communication gaps in global operations, translating and generating content across many of the 200 languages covered in pre-training.

Developer Applications

Developers can harness Llama 4's benchmarked capabilities for:

Coding Assistance

Llama 4's strong performance on coding benchmarks makes it an excellent coding assistant for software development.

Application Personalization

Llama 4 Scout's ability to process extensive user data within its 10M-token context window enables highly personalized application experiences.

Multimodal Applications

Develop sophisticated applications that combine text and image understanding, from visual search to content moderation systems.

Future of Llama 4: What's Next

Meta has indicated that the current Llama 4 models are just the beginning of their vision. Future developments may include:

Expanded Llama 4 Capabilities

More specialized models focusing on specific domains or use cases, building on the foundation established by Scout and Maverick.

Additional Modalities

While the current models handle text and images expertly, future iterations may incorporate more sophisticated video, audio, and other sensory inputs.

Eventual Release of Behemoth

As Llama 4 Behemoth completes its training, Meta may eventually release this powerful model to the developer community.

Conclusion: The Llama 4 Revolution

Llama 4 benchmarks demonstrate that these models represent a significant step forward in open-weight, multimodal AI capabilities. With state-of-the-art performance across reasoning, coding, visual understanding, and multilingual tasks, combined with unprecedented context length support, Llama 4 establishes new standards for what developers can expect from accessible AI models.

As these models become widely available through various online platforms, they will enable a new generation of intelligent applications that can better understand and respond to human needs. Whether you access Llama 4 through Meta's own platforms, third-party services, or deploy it locally, the impressive benchmark results suggest that this new generation of models will power a wave of innovation across industries and use cases.

For developers, researchers, and organizations looking to harness the power of advanced AI, Llama 4 represents an exciting opportunity to build more intelligent, responsive, and helpful systems that can process and understand the world in increasingly human-like ways.

from Anakin Blog http://anakin.ai/blog/where-to-try-llama-4-now-online/
