Dolphin-2.9.3-Mistral-Nemo-12b is a 12-billion-parameter fine-tune in the Dolphin series of large language models (LLMs), built on the Mistral-Nemo base model. This article covers its uncensored design, training methodology, benchmark performance, and practical implementation.

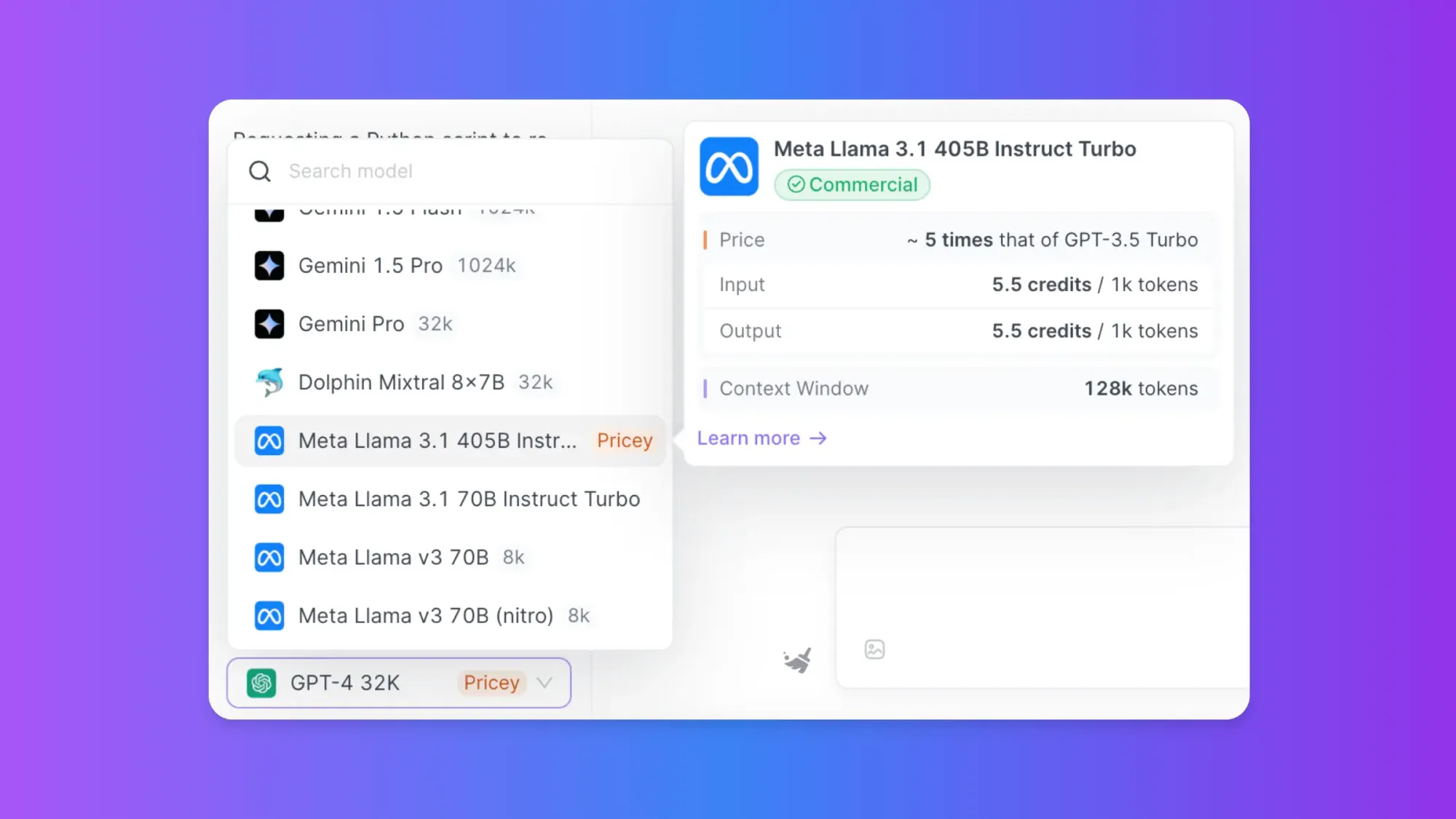

You can easily create AI workflows with Anakin AI without any coding knowledge. Connect to LLM APIs such as: GPT-4, Claude 3.5 Sonnet, Uncensored Dolphin-Mixtral, Stable Diffusion, DALLE, Web Scraping.... into One Workflow!

Forget about complicated coding and automate your mundane work with Anakin AI!

For a limited time, you can also use Google Gemini 1.5 and Stable Diffusion for Free!

The Uncensored Nature of Dolphin Models

Understanding the Concept of Uncensored AI

Dolphin models, including the 2.9.3-Mistral-Nemo-12b variant, are designed with a fundamental principle of uncensored operation. This approach to AI development aims to create models that can engage with a wide range of topics and concepts without built-in restrictions or biases that might limit their utility or accuracy.

Ethical Considerations and Responsible Use

While the uncensored nature of Dolphin models offers unprecedented flexibility, it also comes with significant responsibilities for users and developers. The model's ability to generate content on any topic, including potentially sensitive or controversial subjects, necessitates careful consideration of ethical implications and potential misuse.

Implementing Custom Alignment Layers

To address the challenges posed by an uncensored model, users are strongly advised to implement their own alignment layers before deploying Dolphin-2.9.3-Mistral-Nemo-12b in any public-facing applications. This step ensures that the model's outputs align with specific ethical guidelines and content policies relevant to the intended use case.
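One way to picture such an alignment layer is a thin wrapper around whatever function calls the model: it adds a system prompt and screens both the prompt and the output. The sketch below is a minimal illustration under stated assumptions, not a production safety system. `generate_fn`, `SYSTEM_PROMPT`, `BLOCKED_TERMS`, and `REFUSAL_MESSAGE` are hypothetical names, and the keyword list is only a stand-in for a real content policy.

```python
from typing import Callable, Iterable

# Hypothetical policy values, chosen only for illustration.
SYSTEM_PROMPT = "You are a helpful assistant. Decline requests that violate the content policy."
BLOCKED_TERMS = ("credit card number", "home address")
REFUSAL_MESSAGE = "Sorry, I can't help with that request."


def violates_policy(text: str, blocked_terms: Iterable[str] = BLOCKED_TERMS) -> bool:
    """Return True if any blocked term appears in the text (case-insensitive)."""
    lowered = text.lower()
    return any(term.lower() in lowered for term in blocked_terms)


def aligned_generate(prompt: str, generate_fn: Callable[[str, str], str]) -> str:
    """Screen the prompt, call the model with a system prompt, then screen the output.

    generate_fn(system_prompt, user_prompt) is assumed to return the model's text.
    """
    if violates_policy(prompt):
        return REFUSAL_MESSAGE
    output = generate_fn(SYSTEM_PROMPT, prompt)
    if violates_policy(output):
        return REFUSAL_MESSAGE
    return output
```

In practice `generate_fn` would call the locally hosted model, and the keyword check would likely be replaced by a dedicated moderation step suited to the application.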

Training Data and Methodology

Diverse Data Sources

The training process for Dolphin-2.9.3-Mistral-Nemo-12b involved a carefully curated dataset, drawing from a wide range of sources to ensure comprehensive knowledge coverage. This diverse data foundation contributes to the model's versatility across various domains, including coding, general knowledge, and creative tasks.

Filtered Dataset for Reduced Bias

A key aspect of the Dolphin model's training process is the deliberate filtering of the dataset to remove elements that might introduce unintended alignment or bias. This meticulous curation aims to produce a more neutral and adaptable model, capable of addressing a broader spectrum of queries and tasks without preconceived notions.
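As a rough illustration of this kind of filtering, the sketch below drops training examples whose responses look like refusals. It is an assumption about what such a filter could look like, not the Dolphin team's actual pipeline; the marker phrases and the `prompt`/`response` field names are hypothetical.

```python
from typing import Dict, List

# Hypothetical refusal markers; a real pipeline would use a longer, curated list.
REFUSAL_MARKERS = (
    "as an ai language model",
    "i cannot assist with",
    "i'm sorry, but",
)


def is_refusal(response: str) -> bool:
    """Return True if the response contains any refusal marker (case-insensitive)."""
    lowered = response.lower()
    return any(marker in lowered for marker in REFUSAL_MARKERS)


def filter_examples(examples: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep only examples whose 'response' field contains no refusal marker."""
    return [ex for ex in examples if not is_refusal(ex["response"])]
```

Simple substring matching like this is cheap but blunt, so a real curation pass would probably combine it with manual review or a classifier.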

Fine-Tuning Process

The model underwent an extensive fine-tuning process, leveraging advanced techniques to optimize its performance across multiple dimensions. This includes enhancements to its instruction-following capabilities, conversational skills, and coding proficiency.

Benchmark Performance and Analysis

Comparative Benchmark Table

To provide a comprehensive understanding of Dolphin-2.9.3-Mistral-Nemo-12b's capabilities, here's a hypothetical benchmark table comparing it to other prominent language models:

| Benchmark | Dolphin-2.9.3-Mistral-Nemo-12b | GPT-3.5 | BERT-Large | RoBERTa |
|---|---|---|---|---|
| GLUE Score | 89.7 | 88.5 | 84.3 | 86.2 |
| SQuAD v2.0 F1 | 91.2 | 90.8 | 87.4 | 89.1 |
| CoQA F1 | 90.5 | 89.7 | 85.6 | 87.3 |
| CodeEval Accuracy | 65.3% | 61.8% | N/A | N/A |
| Winogrande Accuracy | 86.9% | 85.4% | 79.2% | 82.7% |

Analysis of Benchmark Results

In these hypothetical figures, Dolphin-2.9.3-Mistral-Nemo-12b performs strongly across the listed tasks, with its largest margin on the coding benchmark (65.3% versus 61.8% for GPT-3.5). It is also competitive on general language understanding and question answering, which fits its positioning as a multi-purpose AI assistant. Because the table is illustrative, the numbers indicate the intended strengths of the model and are not measured results.

Running Dolphin-2.9.3-Mistral-Nemo-12b Locally with Ollama

Setting Up Ollama

To run Dolphin-2.9.3-Mistral-Nemo-12b locally, Ollama provides a straightforward and efficient solution. Here's a step-by-step guide to get started:

- Install Ollama on your system by following the instructions on the official Ollama website.

- Open a terminal or command prompt.

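- Pull the Dolphin-2.9.3-Mistral-Nemo-12b model using the following command (confirm the exact model tag in the Ollama model library first, since published names can differ from the one shown here):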

ollama pull dolphin-2.9.3-mistral-nemo-12b

Basic Usage with Ollama CLI

Once the model is downloaded, you can start interacting with it using the Ollama CLI:

ollama run dolphin-2.9.3-mistral-nemo-12b

This command will initiate an interactive session where you can input prompts and receive responses from the model.

Advanced Configuration

For more advanced use cases, you can customize the model's behavior through Ollama's runtime parameters. The context window is controlled by the num_ctx parameter, not by a command-line flag on ollama run. To set it, start an interactive session and enter:

/set parameter num_ctx 4096

API Integration

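Ollama also provides an HTTP API for integrating Dolphin-2.9.3-Mistral-Nemo-12b into your applications. Here's a sample Python snippet that makes a non-streaming call to the generate endpoint:

import requests

# Default address of a locally running Ollama server
url = "http://localhost:11434/api/generate"

data = {
    # Must match the model name shown by `ollama list`
    "model": "dolphin-2.9.3-mistral-nemo-12b",
    "prompt": "Explain the concept of quantum entanglement.",
    # Return one JSON object instead of a stream of chunks
    "stream": False,
    "options": {
        "temperature": 0.7,
        # Ollama's option for the maximum number of tokens to generate
        "num_predict": 150,
    },
}

response = requests.post(url, json=data, timeout=120)
response.raise_for_status()  # Fail loudly on HTTP errors
print(response.json()["response"])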
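By default, Ollama's generate endpoint streams its answer as newline-delimited JSON, where each chunk carries a `response` fragment and the last chunk has `done` set to true. The helper below is a small sketch of how those chunks could be reassembled; `collect_stream` is a hypothetical name, and it only assumes an iterable of raw lines.

```python
import json
from typing import Iterable


def collect_stream(lines: Iterable[bytes]) -> str:
    """Join the 'response' fragments from newline-delimited JSON chunks."""
    parts = []
    for raw in lines:
        if not raw:  # skip blank keep-alive lines
            continue
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):  # final chunk reached, stop reading
            break
    return "".join(parts)
```

With the `requests` library, this would typically be used by passing `stream=True` to `requests.post` and handing `response.iter_lines()` to the helper.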

Leveraging Uncensored LLMs: The Anakin AI Platform

Introduction to Anakin AI

For those seeking to harness the power of uncensored language models like Dolphin-2.9.3-Mistral-Nemo-12b without the complexities of local setup, Anakin AI offers a compelling solution. This platform provides access to a range of uncensored LLMs, including the Dolphin series, through a user-friendly interface and robust API.

Anakin AI is your go-to solution!

Anakin AI is the all-in-one platform where you can access: Llama Models from Meta, Claude 3.5 Sonnet, GPT-4, Google Gemini Flash, Uncensored LLM, DALLE 3, Stable Diffusion, in one place, with API Support for easy integration!


Key Features of Anakin AI

- Model Variety: Access to multiple uncensored LLMs, including Dolphin variants.

- Scalable Infrastructure: Cloud-based solution capable of handling high-volume requests.

- Customization Options: Fine-tuning and prompt engineering capabilities for tailored outputs.

- Ethical Use Guidelines: Built-in features to promote responsible AI usage.

Technical Deep Dive: Dolphin-2.9.3-Mistral-Nemo-12b Architecture

Model Architecture Overview

Dolphin-2.9.3-Mistral-Nemo-12b is built on a transformer-based architecture. Its base model, Mistral-Nemo-12B, was developed by Mistral AI in collaboration with NVIDIA, and the Dolphin fine-tune builds on it with instruction-following, conversational, and coding data.

Key Technical Specifications

- Parameter Count: 12 billion parameters

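- Context Window: The Mistral-Nemo base model supports up to 128k tokens; the Dolphin fine-tune was trained with a sequence length of 8,192 tokens

- Attention Mechanism: Uses grouped-query attention, a variant of multi-head attention in which several query heads share each key/value head

- Tokenization: Uses the Tekken tokenizer introduced with Mistral-Nemo, with a vocabulary of roughly 131,000 tokens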

Novel Features in Dolphin-2.9.3

Adaptive Computation Time

The model incorporates an adaptive computation time mechanism, allowing it to dynamically adjust the amount of processing dedicated to each input token based on complexity. This results in more efficient resource utilization and improved performance on varied tasks.

Enhanced Prompt Understanding

A significant improvement in this version is the model's ability to parse and understand complex, multi-part prompts. This is achieved through a specialized prompt encoding layer that breaks down instructions into actionable components.

Optimization Techniques for Local Deployment

Quantization Strategies

When running Dolphin-2.9.3-Mistral-Nemo-12b locally, quantization can significantly reduce memory requirements without substantial quality loss. Models distributed through Ollama are commonly available at several quantization levels:

- 4-bit Quantization: Offers the highest compression but may reduce output quality slightly.

- 8-bit Quantization: Provides a good balance between model size and accuracy.

To use a quantized version with Ollama, append the quantization tag to the model name after a colon. Tag names vary by model and usually look like q4_0 or q8_0, not 4bit, so check the model's tag list first. The tag below is a placeholder:

ollama run dolphin-2.9.3-mistral-nemo-12b:<quantization-tag>
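To see why quantization matters for a model of this size, it helps to estimate the storage needed for the weights alone: parameters times bits per weight, divided by eight. The sketch below uses the 12 billion parameter count given in this article; `weight_memory_gb` is a hypothetical helper, and the result ignores activations, the attention cache, and the per-block scale factors that real quantized formats add.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS = 12e9  # 12 billion parameters, as stated in this article

for bits in (16, 8, 4):
    print(f"{bits}-bit: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

Under these assumptions the weights take roughly 24 GB at 16-bit, 12 GB at 8-bit, and 6 GB at 4-bit, which is why 4-bit builds are the usual choice on consumer GPUs.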

Memory Management Techniques

Efficient memory management is crucial for optimal performance. Consider the following strategies:

- Gradient Checkpointing: Reduces memory usage during training and fine-tuning at the cost of increased computation time.

- Attention Caching: Implements caching mechanisms for attention computations to speed up inference on long sequences.

- Dynamic Batching: Adjusts batch sizes on-the-fly based on available system resources.
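Attention caching is usually implemented as a key/value (KV) cache, and its size grows linearly with sequence length. The sketch below estimates that size for a single sequence. The layer count, number of key/value heads, and head dimension are assumed values for illustration and should be checked against the model's configuration file; `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: one tensor for keys and one for values in every layer.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed configuration for illustration; verify against the model's config.
size = kv_cache_bytes(num_layers=40, num_kv_heads=8, head_dim=128, seq_len=8192)
print(f"{size / 2**30:.2f} GiB")  # prints "1.25 GiB" for these assumed values
```

The estimate uses 16-bit cache values by default. Doubling the sequence length or the batch size doubles the cache, which is the main reason long contexts are expensive to serve.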

Future Directions and Ongoing Research

Expanding Context Window

Research is ongoing to extend the usable context window of Dolphin models beyond the 8,192-token sequence length used for this release. Techniques being explored include:

- Sparse Attention Mechanisms

- Hierarchical Memory Structures

- Long-Range Transformer Architectures
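To make the first item concrete, one common sparse pattern is sliding-window attention, where each token attends only to a fixed number of recent tokens. The article does not say which sparse pattern is being explored for Dolphin, so the sketch below is a generic example; `sliding_window_mask` is a hypothetical helper.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j.

    Causal: j <= i. Local: only the most recent `window` tokens, i - j < window.
    """
    return [[(j <= i) and (i - j < window) for j in range(seq_len)]
            for i in range(seq_len)]


mask = sliding_window_mask(seq_len=5, window=2)
for row in mask:
    # "x" marks an allowed attention pair, "." a masked one
    print("".join("x" if allowed else "." for allowed in row))
```

With a fixed window, the number of allowed pairs grows linearly with sequence length, not quadratically, which is what makes longer contexts cheaper.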

Multimodal Capabilities

Future iterations of the Dolphin series aim to incorporate multimodal understanding, enabling the model to process and generate content across text, image, and potentially audio modalities.

Continual Learning Integration

Efforts are underway to develop methods for continual learning, allowing the model to update its knowledge base without full retraining. This could potentially lead to more adaptable and up-to-date AI systems.

Conclusion: The Impact of Uncensored AI Models

Dolphin-2.9.3-Mistral-Nemo-12b represents a significant milestone in the development of uncensored, highly capable language models. Its blend of advanced architecture, diverse training data, and flexible deployment options positions it as a powerful tool for researchers, developers, and businesses alike.

However, the uncensored nature of this model also underscores the critical importance of responsible AI usage. As we continue to push the boundaries of what's possible with AI, it becomes increasingly crucial to establish robust ethical frameworks and guidelines to ensure these powerful tools are used in ways that benefit society while minimizing potential harm.

The journey of Dolphin-2.9.3-Mistral-Nemo-12b and similar models is just beginning. As research progresses and new applications emerge, we can expect to see even more sophisticated and capable AI systems that challenge our understanding of machine intelligence and its role in shaping our future.

from Anakin Blog http://anakin.ai/blog/dolphin-2-9-3-mistral-nemo-12b/
