In the bustling realm of artificial intelligence, Apple's Ferret is turning heads. This isn't just another AI; it's a Multimodal Large Language Model (MLLM) developed by Apple that can refer to specific regions of an image and ground its answers in them. Let's get a panoramic view of the tech that’s getting everyone talking.

How Apple Created Ferret, a Multimodal LLM Breakthrough

Apple isn't just about sleek devices; they're pioneers on the AI frontier, conjuring up something downright magical with Ferret.

Ferret is a novel Multimodal Large Language Model (MLLM). The key facts:

- Apple’s AI/ML team joined forces with researchers from Columbia University to create Ferret.

- Hybrid Region Representation: A pioneering approach combining coordinate-based and feature-based region representation.

- Spatial-aware Visual Sampler: A mechanism capable of handling the complexity and sparsity of different regional shapes within images.

- Ferret is built on top of Vicuna, a popular open-source LLM.

- Ferret has emerged as a solution to a long-standing challenge in the field of vision-language learning: spatial understanding.

To see what this means in practice, picture this: you're leafing through your digital photo album, and a question pops into your head,

"What's that quirky statue in my Rome trip pic?"

Ask Ferret, and you'll be gobsmacked because it'll not only tell you that it's the Statue of Pasquino but also draw a circle around it. Yes, this AI is like having a professional guide sitting in your computer!

How Ferret Works

Ferret's functional architecture hinges upon three critical components:

- Image Encoder: Used for extracting image embeddings to be processed further.

- Spatial-Aware Visual Sampler: It is adept at extracting continuous visual features from regions in all shapes by considering varying sparsity.

- Large Language Model (LLM): Ties together both image and text embeddings, along with region features, interpreting them as a cohesive unit.
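
To make the sampler's role more concrete, here is a heavily simplified sketch in plain Python. It is not Ferret's actual sampler. It only illustrates the general idea of sampling points inside an arbitrarily shaped region and pooling the image features found there into a single region feature. The function name, the toy feature map stored as nested lists, and the fixed sample count are all assumptions made for illustration.

```python
import random

def pool_region_feature(feature_map, mask, num_samples=8, seed=0):
    """Mean-pool features at points sampled inside a binary region mask.

    feature_map: H x W grid, each cell a list of C floats (toy image features).
    mask: H x W grid of 0/1 marking the referred region (any shape).
    """
    # Collect every (row, col) location that lies inside the region.
    inside = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not inside:
        raise ValueError("region mask is empty")
    # Sample a fixed number of points so sparse and dense regions cost the same.
    rng = random.Random(seed)
    points = [rng.choice(inside) for _ in range(num_samples)]
    channels = len(feature_map[0][0])
    # Average the feature vectors at the sampled points, channel by channel.
    return [sum(feature_map[r][c][k] for r, c in points) / num_samples
            for k in range(channels)]

# Toy 2x2 feature map with 2 channels; the region covers only the left column.
features = [[[1.0, 0.0], [9.0, 9.0]],
            [[3.0, 2.0], [9.0, 9.0]]]
region = [[1, 0],
          [1, 0]]
region_feature = pool_region_feature(features, region)
```

Because the number of sampled points is fixed, a thin scribble and a large box both produce a feature of the same size, which is the property the LLM needs in order to treat every region as one more input embedding.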

Ferret accepts a sophisticated input scheme:

- A question referencing a region in the image.

- Coordinates and a special placeholder token representing the region are embedded alongside text.

- Supports referred regions ranging from single points and bounding boxes to more complex polygons or free-form shapes.
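
The input scheme above can be sketched roughly as follows. This is a minimal illustration, not the repository's preprocessing code: the placeholder token name `<region_fea>`, the `<region>` marker, and the bracketed coordinate format are assumptions chosen for the example.

```python
REGION_TOKEN = "<region_fea>"  # assumed placeholder name, for illustration only

def format_region_question(question, box, marker="<region>"):
    """Replace `marker` in the question with box coordinates plus a placeholder token.

    box: (x1, y1, x2, y2) in pixel coordinates; a point can be passed as a
    degenerate box with x1 == x2 and y1 == y2.
    """
    x1, y1, x2, y2 = box
    if x1 > x2 or y1 > y2:
        raise ValueError("box must satisfy x1 <= x2 and y1 <= y2")
    if marker not in question:
        raise ValueError("question does not reference a region")
    # Coordinates go in as text; the token marks where region features are injected.
    region_text = f"[{x1}, {y1}, {x2}, {y2}] {REGION_TOKEN}"
    return question.replace(marker, region_text, 1)

prompt = format_region_question("What is the object <region>?", (100, 50, 220, 180))
print(prompt)  # What is the object [100, 50, 220, 180] <region_fea>?
```

The coordinates give the language model a discrete description of where the region is, while the placeholder reserves a slot where the continuous region feature is embedded alongside the text.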

Benchmarks and Evaluations

Ferret underwent extensive evaluations on multiple fronts aiming to ascertain its efficacy:

- Referring Object Classification: Understood as the ability to correctly recognize an object referred to within an image.

- Visual Grounding: The capability of the model to connect textual descriptions accurately to image regions.

- Grounded Captioning: The generation of image captions that also include localized region descriptions.
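
Grounding tasks like these are commonly scored by comparing predicted boxes to annotated ones with intersection-over-union (IoU). The sketch below shows that general convention; the 0.5 threshold and the function names are assumptions, and the repository's own evaluation scripts may apply different rules.

```python
def box_iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def grounding_accuracy(predictions, ground_truth, threshold=0.5):
    """Fraction of predicted boxes whose IoU with the target meets the threshold."""
    hits = sum(box_iou(p, g) >= threshold for p, g in zip(predictions, ground_truth))
    return hits / len(ground_truth)

preds = [(0, 0, 10, 10), (0, 0, 10, 10)]
truth = [(0, 0, 10, 10), (5, 5, 15, 15)]
accuracy = grounding_accuracy(preds, truth)  # first box matches, second overlaps too little
```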

Comparing Apple's Ferret model to other recent MLLMs:

Source: https://arxiv.org/pdf/2310.07704.pdf

GitHub Page: https://github.com/apple/ml-ferret

The comparison table in the paper linked above sets Ferret against recent Multimodal Large Language Models (MLLMs) with a focus on spatial awareness. Key observations include:

- Input Types: Ferret supports point, box, and free-form inputs, a capability lacking in most other models, such as BuboGPT and VisionLLM.

- Output Grounding: All models except GPT4RoI and PVIT support output grounding.

- Data Construction: Most models use conventional and GPT-generated data. Only Ferret and Shikra incorporate data for robustness.

- Quantitative Evaluation: Ferret is the only model in the comparison that is quantitatively evaluated on referring and grounding in a chat setting.

How to Run Apple's Ferret Model

To use the Ferret model yourself, here's a step-by-step guide to setting it up, training it, and running it in your own environment:

Prerequisites

- A machine with at least 1 NVIDIA GPU (preferably A100 80GB for optimal performance).

- Minimum software requirements include Python 3.10, CUDA-enabled deep learning libraries, and the OpenAI package for evaluation.

Installation Steps

Clone the Repository:

git clone https://github.com/apple/ml-ferret

cd ml-ferret

Create and Activate Virtual Environment:

conda create -n ferret python=3.10 -y

conda activate ferret

Install Required Packages:

pip install --upgrade pip

pip install -e .

pip install pycocotools protobuf==3.20.0
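
After installation, a quick sanity check can save a failed run later. The following is a small sketch, not part of the repository: it assumes that PyTorch (`torch`) is the CUDA-enabled library in use and only checks that the packages can be found, without importing them.

```python
import importlib.util
import sys

def check_environment(min_python=(3, 10), packages=("torch", "pycocotools")):
    """Return a dict of check name -> bool without importing heavy packages."""
    report = {"python": sys.version_info[:2] >= min_python}
    for name in packages:
        # find_spec returns None when a top-level package is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    return report

for check, ok in check_environment().items():
    print(f"{check}: {'ok' if ok else 'MISSING'}")
```

Run it inside the activated `ferret` environment; any line reporting MISSING points at a step above that needs repeating.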

Training Process

Prepare Vicuna Checkpoint: Download Vicuna's weights based on provided instructions.

Download and Apply Offsets: Retrieve the prepared weight offsets (deltas) for the desired model size (e.g., 7B, 13B), unzip them, and apply them to the Vicuna weights.

python3 -m ferret.model.apply_delta \
    --base ./model/vicuna-7b-v1-3 \
    --target ./model/ferret-7b-v1-3 \
    --delta path/to/ferret-7b-delta
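
Conceptually, applying an offset means adding the released delta to the base weights, parameter by parameter. The toy sketch below illustrates that idea with plain lists standing in for tensors. It is an assumption about what the step does in principle, not a copy of the repository's `apply_delta` module, and the parameter names are made up.

```python
def apply_delta(base_weights, delta_weights):
    """Return target weights where target[name] = base[name] + delta[name].

    Both arguments map parameter names to flat lists of floats (toy tensors).
    Parameters present only in the delta (new modules) are copied as-is.
    """
    target = {}
    for name, delta in delta_weights.items():
        base = base_weights.get(name)
        if base is None:
            # No counterpart in the base model, so the delta is the full weight.
            target[name] = list(delta)
            continue
        if len(base) != len(delta):
            raise ValueError(f"shape mismatch for {name}")
        target[name] = [b + d for b, d in zip(base, delta)]
    return target

base = {"layer.weight": [1.0, 2.0]}
delta = {"layer.weight": [0.5, -1.0], "sampler.weight": [0.25]}
merged = apply_delta(base, delta)
```

Distributing deltas instead of full weights lets the fine-tuned model be shared while the base Vicuna weights are still obtained separately by each user.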

Run Training Script: Utilize scripts provided in the repository for the corresponding model size.

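Sample Demo Commands

Once a checkpoint is available, the following commands launch the interactive demo rather than training. Run the controller, the Gradio web server, and the model worker in separate terminals.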

# Launch Controller

python -m ferret.serve.controller --host 0.0.0.0 --port 10000

# Launch Gradio Web Server

python -m ferret.serve.gradio_web_server --controller http://localhost:10000 --model-list-mode reload --add_region_feature

# Launch Model Worker

CUDA_VISIBLE_DEVICES=0 python -m ferret.serve.model_worker --host 0.0.0.0 --controller http://localhost:10000 --port 40000 --worker http://localhost:40000 --model-path ./checkpoints/FERRET-13B-v0 --add_region_feature
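
If you would rather start the three processes from one script, the commands above can be assembled as argument lists. This is a sketch of one possible launcher helper; the function name and the dictionary layout are assumptions, while the module names and flags are copied from the commands above.

```python
import shlex

CONTROLLER_URL = "http://localhost:10000"

def demo_commands(model_path, worker_port=40000):
    """Return the three demo commands from the text as argument lists."""
    worker_url = f"http://localhost:{worker_port}"
    return {
        "controller": ["python", "-m", "ferret.serve.controller",
                       "--host", "0.0.0.0", "--port", "10000"],
        "web_server": ["python", "-m", "ferret.serve.gradio_web_server",
                       "--controller", CONTROLLER_URL,
                       "--model-list-mode", "reload", "--add_region_feature"],
        "worker": ["python", "-m", "ferret.serve.model_worker",
                   "--host", "0.0.0.0", "--controller", CONTROLLER_URL,
                   "--port", str(worker_port), "--worker", worker_url,
                   "--model-path", model_path, "--add_region_feature"],
    }

# Print the commands for inspection instead of launching anything.
for name, argv in demo_commands("./checkpoints/FERRET-13B-v0").items():
    print(f"{name}: {shlex.join(argv)}")
```

Each list can be handed to `subprocess.Popen`, with `CUDA_VISIBLE_DEVICES` set in the worker's environment to choose the GPU.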

How to Evaluate Ferret

Evaluating Ferret involves several scripts found in the repository. Instructions for each script's usage can be found at the top of the corresponding files.

Evaluation Domains

- Ferret-Bench: Run using gpt4_eval_script.sh with OpenAI key.

- LVIS-Referring Object Classification: Follow steps in model_lvis.py and then eval_lvis.py.

- RefCOCO/RefCOCO+/RefCOCOg: Utilize model_refcoco.py followed by eval_refexp.py.

- Flickr: Use model_flickr.py and proceed with eval_flickr_entities.py.

- POPE: Apply model_pope.py and then eval_pope.py for hallucination evaluation.
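
For the hallucination benchmark, the model answers yes/no questions about whether an object is present, and the answers are scored with standard classification metrics. The sketch below shows how such scores can be computed. It is a generic illustration with assumed function and key names, and the repository's eval_pope.py may differ in detail.

```python
def yes_no_metrics(predictions, labels):
    """Accuracy, precision, recall and F1 for yes/no answers ("yes" is positive)."""
    pairs = [(pred.strip().lower() == "yes", gold.strip().lower() == "yes")
             for pred, gold in zip(predictions, labels)]
    tp = sum(p and g for p, g in pairs)          # said yes, object present
    fp = sum(p and not g for p, g in pairs)      # said yes, object absent (hallucination)
    fn = sum(g and not p for p, g in pairs)      # said no, object present
    tn = sum(not p and not g for p, g in pairs)  # said no, object absent
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

scores = yes_no_metrics(["yes", "yes", "no", "no"], ["yes", "no", "no", "yes"])
```

A model that hallucinates objects tends to answer "yes" too often, which shows up as low precision even when recall looks healthy.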

Sample Evaluation Code

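# For RefCOCOg evaluation (the flag names below are illustrative; check the usage notes at the top of each script for the exact arguments)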

python ferret/eval/model_refcoco.py --checkpoint_dir path/to/checkpoint --output_dir path/to/output --batch_size 16

python ferret/eval/eval_refexp.py --preds_file path/to/output/predictions.json --ground_truth_file path/to/gt.json

The Ferret model stands as testimony to Apple's commitment to advancing AI and offering practical solutions for multimodal conversational interfaces. It represents an impressive step forward in bridging complex NLP and image processing tasks within a single, robust framework.

The GRIT Dataset: What Ferret is Trained On

In the diverse landscape of machine learning datasets, Apple’s Ground-and-Refer Instruction-Tuning (GRIT) dataset is a gem. Far from being another mundane compilation of images and text, GRIT is meticulously crafted, comprising a mammoth 1.1 million samples, each rich in hierarchical spatial knowledge. Let's break down the colossal dataset that makes Ferret as capable as it is.

The GRIT dataset is not your run-of-the-mill machine learning dataset. It's a treasure trove meticulously segmented into various layers of complexity, ensuring Ferret possesses both depth and breadth in its understanding.

Objects and Granularity: GRIT doesn't stop at mere object identification; it delves into the relationship between objects, their region descriptions, and the associated reasoning based on spatial knowledge.

Task Diversity: It covers several task formats, predominantly text-in-region-out, region-in-text-out, and a mix of both.

Negatives for Robustness: To foil any potential AI gullibility, GRIT incorporates negative sample mining, beefing up Ferret’s ability to discern the absent from the present.

The introduction of GRIT propels Ferret into a league of its own.

Robust Conversations: With GRIT, conversations with Ferret are dynamic, where users can refer and ground objects within images, mimicking human interactions accurately.

Open-Vocabulary Training: GRIT ensures Ferret isn’t just an AI stuck with fixed phrases; it can weave talk and imagery fluidly like a well-read scholar.

Layered, But Smarter

Envision a dataset that doesn't only test the AI’s simple 'see-and-tell' but challenges it to reason, relate, and robustly comprehend complex spatial narratives.

Visual Genome Treasure: At its core, GRIT leverages datasets like Visual Genome for constructing hierarchies that span from objects to relationships and regional descriptions.

Instruction Following: Unlike aimless data, GRIT is formatted as instruction-following data, so Ferret learns to follow commands like a well-taught cadet.

GPT-generated: To simulate the creative fluidity of human conversation, part of GRIT is spun from the yarn of OpenAI’s GPT models, adding a conversational touch.

Spatial Negative Mining: By adopting image-conditioned and semantics-conditioned negative mining strategies, GRIT shields Ferret against object hallucination.
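
The idea behind negative mining can be sketched in a few lines: ask about objects that would be plausible in the scene but are not actually there, and label the answer "no". The code below is a simplified illustration under stated assumptions, not the procedure used to build GRIT. The `related_objects` lookup is a hypothetical stand-in for the mining step, and the question template is invented for the example.

```python
def mine_negative_questions(present_objects, related_objects, max_negatives=2):
    """Build yes/no questions about plausible objects that are absent from an image.

    present_objects: set of category names annotated in the image.
    related_objects: dict mapping a category to semantically or contextually
        related categories (a hypothetical lookup standing in for the mining step).
    """
    candidates = []
    for obj in sorted(present_objects):
        for other in related_objects.get(obj, []):
            # Keep only categories that are plausible here but not actually present.
            if other not in present_objects and other not in candidates:
                candidates.append(other)
    return [{"question": f"Is there a {name} in the image?", "answer": "no"}
            for name in candidates[:max_negatives]]

negatives = mine_negative_questions(
    {"dog", "frisbee"},
    {"dog": ["cat", "leash"], "frisbee": ["dog", "ball"]},
)
```

Training on samples like these teaches the model that "not present" is a valid answer, instead of always producing a region for whatever object the question names.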

Practical Applications of Apple's Ferret Model

The development of Apple's Ferret Model is not just a technical exercise; it has real-world implications. It advances our interactions with technology in unprecedented ways, promising seamless integration into various applications that can benefit from enhanced multimodal understanding. Here are a few potential applications:

- Enhanced Image Searching: Allowing users to search for images or objects within images using natural language and spatial references.

- Assistive Technology: Helping visually impaired individuals understand their surroundings by describing scenes and objects.

- Educational Tools: Enabling interactive learning experiences where students can ask detailed questions about visual content.

- Robotics: Assisting robots in understanding commands that involve interacting with objects in their surroundings.

Such applications underscore the transformative potential of the Ferret Model in shaping the future of human-computer interaction.

Future Directions for Ferret

Apple’s Ferret is an evolving project with potential enhancements, including:

- Increasing Model Size: Even larger models may yield better performance, given the current trend in deep learning.

- Broader Dataset Collection: Expanding the dataset to include more varied and complex scenarios could improve the model's robustness.

- Cross-modal Learning: Integrating other modalities like audio or tactile inputs could further enrich the model’s capabilities.

Conclusion

Apple's Ferret Model stands as a testament to the progress in multimodal machine learning. Merging language, vision, and spatial understanding into a cohesive model has paved the way for impressive new functionalities. The careful consideration of data, robust training frameworks, and thoughtful benchmarks reflect the meticulous approach behind Ferret.

Intrigued? You bet! Ferret isn’t just bridging gaps; it’s closing them with a snap of its AI fingers. This isn't just another AI discourse; it’s a glimpse into a future where asking and seeing blend gracefully, all thanks to Apple.

Taking on the AI conundrum head-on, Ferret stands as a testament to Apple’s unrelenting passion for innovation with elegance. Stay tuned, for who knows what marvel Apple's savvy squad will whip up next?

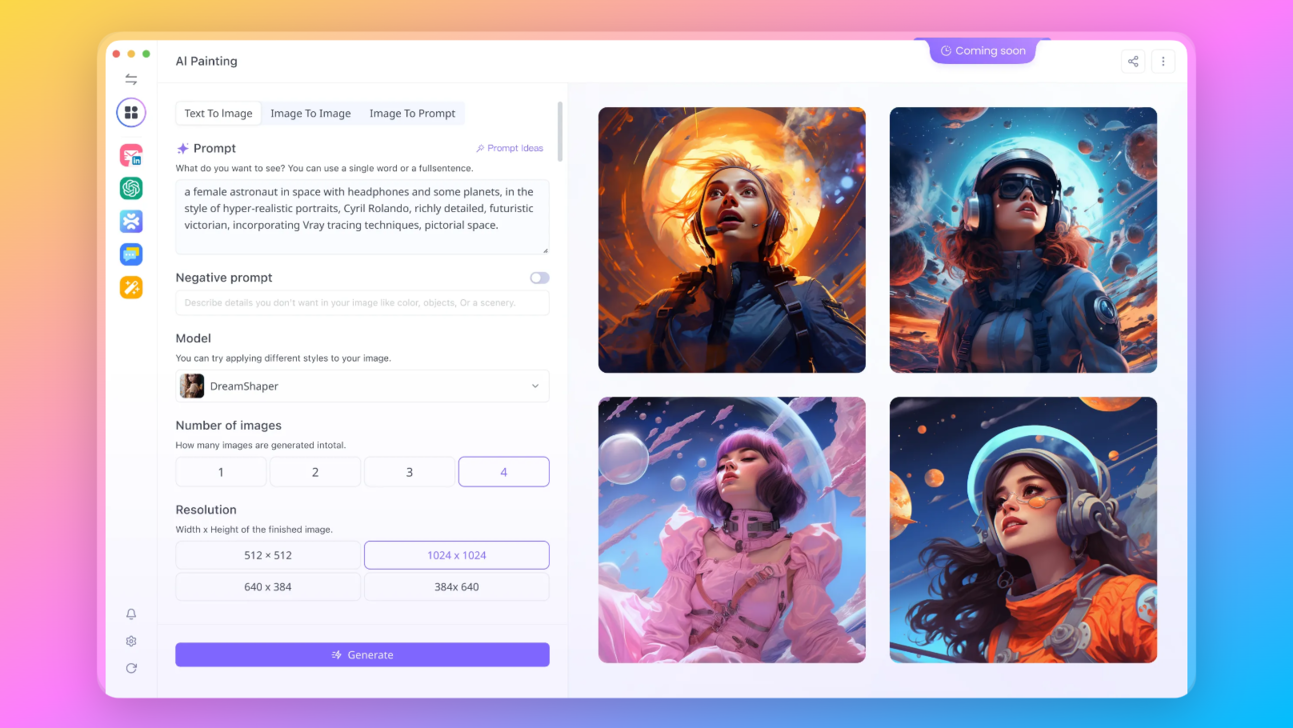

Want to test out all these awesome LLMs online? Try Anakin AI!

Anakin AI is one of the most convenient way that you can test out some of the most popular AI Models without Here are the other Open Source and Free Models that Anakin AI supports:

- Mistral 7B and 8x7B: the hottest names for Open Source LLMs!

- Dolphin-2.5-Mixtral-8x7b: get a taste the wild west of uncensored Mixtral 8x7B!

- OpenHermes-2.5-Mistral-7B: One of the best performing Mistral-7B fine tune models, give it a shot!

- OpenChat, now you can build Open Source Lanugage Models, even if your data is imperfect!

Other models include:

- GPT-4: Boasting an impressive context window of up to 128k, this model takes deep learning to new heights.

- Google Gemini Pro: Google's AI model designed for precision and depth in information retrieval.

- DALLE 3: Create stunning, high-resolution images from textual descriptions.

- Stable Diffusion: Generate images with a unique artistic flair, perfect for creative projects.

Want to test out all these awesome LLMs online? Try Anakin AI!

Anakin AI is one of the most convenient way that you can test out some of the most popular AI Models without downloading them!

from Anakin Blog http://anakin.ai/blog/ferret-apple/
